k nearest neighbor (kNN): how it works

How K-Nearest Neighbors (kNN) Classifier Works

kNN (k-Nearest Neighbors) is a simple classification algorithm that stores all available cases and classifies new cases based on a similarity measure (e.g., a distance function).

Algorithm

To classify a new case, we calculate the distance between it and each training sample, sort the distances in ascending order, and take the K samples with the smallest distances as its K nearest neighbors. The case is then classified by a majority vote: it is assigned to the class most common among its K nearest neighbors. If K = 1, the case is simply assigned to the class of its nearest neighbor.
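The steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names `euclidean` and `knn_classify` and the toy data are assumptions made for this example, and Euclidean distance is just one possible choice of distance function.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_classify(train_X, train_y, query, k=3, distance=euclidean):
    """Classify `query` by majority vote among its k nearest training samples."""
    # Distance from the query to every training sample, paired with its label.
    scored = [(distance(x, query), label) for x, label in zip(train_X, train_y)]
    # Sort ascending by distance and keep the k closest samples.
    scored.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in scored[:k]]
    # Majority vote; on a tie, the label seen first among the neighbors wins.
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical toy data: two small clusters labelled "a" and "b".
train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 6.5), (6.5, 7.0)]
train_y = ["a", "a", "a", "b", "b", "b"]

print(knn_classify(train_X, train_y, (1.2, 1.1), k=3))
print(knn_classify(train_X, train_y, (6.4, 6.4), k=1))
```

Note that there is no training step here: all the work happens at query time, which is why the cost of a prediction grows with the size of the training set.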

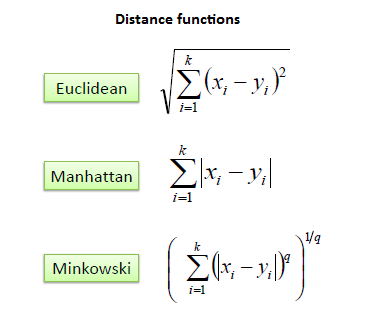

Distance

Note that all three distance measures are valid only for continuous variables. For categorical variables, the Hamming distance should be used instead.

Suppose we have a categorical attribute gender with domain $\{male, female\}$, and a new case $x$ whose gender is $male$. For each training sample, we calculate the gender distance to the new case: the distance is 0 if the two genders are the same, and 1 otherwise.
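As a small sketch of the rule just described, the Hamming distance between two records can be computed as the number of attributes on which they disagree. The function name `hamming` and the sample records are assumptions made for illustration.

```python
def hamming(a, b):
    """Number of positions at which two equal-length attribute tuples differ."""
    if len(a) != len(b):
        raise ValueError("records must have the same number of attributes")
    return sum(1 for x, y in zip(a, b) if x != y)

# One categorical attribute (gender), as in the text.
new_case = ("male",)
training = [("male",), ("female",), ("male",)]

distances = [hamming(sample, new_case) for sample in training]
print(distances)  # [0, 1, 0]
```

With a single attribute this reduces to the 0-or-1 rule above; with several categorical attributes it counts how many of them differ.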

Normalization

Because the distance for a categorical attribute is either 0 or 1, numerical variables should be rescaled to the range 0 to 1 when the dataset contains a mixture of numerical and categorical variables.
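The sketch below shows one way to do this rescaling (min-max scaling) and to combine a scaled numerical attribute with a 0-or-1 categorical distance. The helper names `min_max_scale` and `mixed_distance`, and the choice to combine the two parts under a single square root, are assumptions for illustration; other ways of combining them are possible.

```python
import math

def min_max_scale(values):
    """Rescale a list of numbers linearly onto the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: there is no spread to rescale
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def mixed_distance(a, b):
    """Distance between two records of the form (scaled_number, category)."""
    numeric_part = (a[0] - b[0]) ** 2
    categorical_part = 0 if a[1] == b[1] else 1  # 0 if equal, else 1
    return math.sqrt(numeric_part + categorical_part)

ages = [20, 40, 60]
scaled_ages = min_max_scale(ages)
print(scaled_ages)  # [0.0, 0.5, 1.0]

# After scaling, a numeric difference can contribute at most 1,
# the same as a categorical mismatch.
print(mixed_distance((0.0, "male"), (0.5, "male")))    # 0.5
print(mixed_distance((0.0, "male"), (0.0, "female")))  # 1.0
```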

Choosing K

Choosing the optimal value for K is best done by first inspecting the data. In general, a larger K tends to be more precise because it reduces the effect of noise, but there is no guarantee. Cross-validation is another way to retrospectively determine a good K value, by validating each candidate K on an independent dataset. Historically, the optimal K for most datasets has been between 3 and 10, which produces much better results than 1NN.
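One simple form of cross-validation is leave-one-out: each sample is held out in turn, predicted from the remaining samples, and the fraction of correct predictions is recorded for each candidate K. The sketch below assumes Euclidean distance and Python 3.8+ (for `math.dist`); the function names and the toy data, which contain one deliberately mislabelled point, are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k):
    """Majority vote among the k training samples closest to `query`."""
    scored = sorted(
        ((math.dist(x, query), label) for x, label in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )
    return Counter(label for _, label in scored[:k]).most_common(1)[0][0]

def loo_accuracy(X, y, k):
    """Leave-one-out accuracy: predict each sample from all the others."""
    correct = 0
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        if knn_predict(rest_X, rest_y, X[i], k) == y[i]:
            correct += 1
    return correct / len(X)

def choose_k(X, y, candidates):
    """Return the best-scoring candidate K (first one wins on ties) and all scores."""
    scores = {k: loo_accuracy(X, y, k) for k in candidates}
    best_k = max(scores, key=scores.get)
    return best_k, scores

# Two clusters plus one noisy point labelled "b" inside the "a" cluster.
X = [(1, 1), (1, 2), (2, 1), (2, 2), (6, 6), (6, 7), (7, 6), (7, 7), (1.5, 1.5)]
y = ["a", "a", "a", "a", "b", "b", "b", "b", "b"]

best_k, scores = choose_k(X, y, candidates=[1, 3, 5])
print(best_k, scores)
```

On this toy data the noisy point misleads 1NN for every sample in the "a" cluster, while K = 3 outvotes it, which illustrates why a somewhat larger K can be more robust to noise.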

Example 1

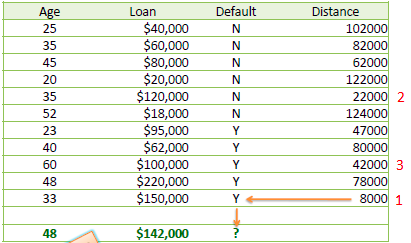

Suppose we have the following training data on credit default. Age and Loan are two numerical variables (predictors), and Default is the target.

| Age | Loan | Default |
|---|---|---|
| 25 | $40,000 | N |
| 35 | $60,000 | N |
| 45 | $80,000 | N |
| 20 | $20,000 | N |
| 35 | $120,000 | N |
| 52 | $18,000 | N |
| 23 | $95,000 | Y |
| 40 | $62,000 | Y |
| 60 | $100,000 | Y |
| 48 | $220,000 | Y |
| 33 | $150,000 | Y |

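Now we have an unknown case (Age=48 and Loan=$142,000), and we want to predict its credit default using Euclidean distance:

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

Computing this distance from the unknown case to every training sample, the three smallest distances are approximately 8,000 (Age=33, Loan=$150,000, Default=Y), 22,000 (Age=35, Loan=$120,000, Default=N), and 42,000 (Age=60, Loan=$100,000, Default=Y).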

With k=3, there are two Default=Y and one Default=N out of the three closest neighbors. So the prediction for this case is Default=Y.
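The calculation in this example can be reproduced with a short script. This is a sketch that assumes Python 3.8+ (for `math.dist`); the rows are copied from the training table, and the variable names are chosen for illustration.

```python
import math
from collections import Counter

# (Age, Loan in dollars, Default) rows from the training table.
training = [
    (25, 40_000, "N"), (35, 60_000, "N"), (45, 80_000, "N"),
    (20, 20_000, "N"), (35, 120_000, "N"), (52, 18_000, "N"),
    (23, 95_000, "Y"), (40, 62_000, "Y"), (60, 100_000, "Y"),
    (48, 220_000, "Y"), (33, 150_000, "Y"),
]
query = (48, 142_000)  # the unknown case: Age=48, Loan=$142,000

# Euclidean distance from the query to each training row, sorted ascending.
ranked = sorted(
    ((math.dist((age, loan), query), age, loan, label)
     for age, loan, label in training),
    key=lambda row: row[0],
)

k = 3
neighbors = ranked[:k]
prediction = Counter(row[3] for row in neighbors).most_common(1)[0][0]

for dist, age, loan, label in neighbors:
    print(f"Age={age}, Loan=${loan:,}, Default={label}, distance={dist:,.2f}")
print("Prediction:", prediction)
```

The distances are almost entirely determined by the Loan column, because a difference of a few thousand dollars dwarfs any difference in age.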

Example 2

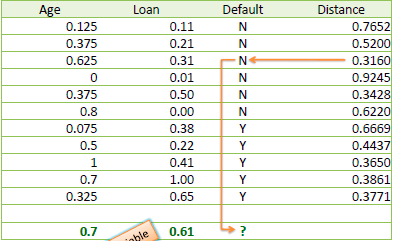

One major drawback of calculating distance measures directly from the training set arises when variables have different measurement scales or when numerical and categorical variables are mixed. For example, if one variable is annual income in dollars and another is age in years, income will have a much greater influence on the calculated distance. One solution is to standardize the training set, for example by rescaling each numerical variable to the range 0 to 1 with $x_s = \frac{x - \min}{\max - \min}$.
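To see the effect of standardization, the sketch below applies min-max scaling to the credit default data from Example 1 and repeats the nearest-neighbor search. It assumes Python 3.8+ (for `math.dist`) and that the minimum and maximum are taken from the training set only; the helper name `scale` is hypothetical.

```python
import math
from collections import Counter

# (Age, Loan in dollars, Default) rows from the Example 1 training table.
training = [
    (25, 40_000, "N"), (35, 60_000, "N"), (45, 80_000, "N"),
    (20, 20_000, "N"), (35, 120_000, "N"), (52, 18_000, "N"),
    (23, 95_000, "Y"), (40, 62_000, "Y"), (60, 100_000, "Y"),
    (48, 220_000, "Y"), (33, 150_000, "Y"),
]
query = (48, 142_000)

# Column ranges, taken from the training set only (an assumption of this sketch).
ages = [row[0] for row in training]
loans = [row[1] for row in training]
age_lo, age_hi = min(ages), max(ages)
loan_lo, loan_hi = min(loans), max(loans)

def scale(age, loan):
    """Min-max scale one (age, loan) pair using the training-set ranges."""
    return ((age - age_lo) / (age_hi - age_lo),
            (loan - loan_lo) / (loan_hi - loan_lo))

scaled_query = scale(*query)
ranked = sorted(
    ((math.dist(scale(age, loan), scaled_query), age, loan, label)
     for age, loan, label in training),
    key=lambda row: row[0],
)

k = 3
neighbors = ranked[:k]
prediction = Counter(row[3] for row in neighbors).most_common(1)[0][0]

for dist, age, loan, label in neighbors:
    print(f"Age={age}, Loan=${loan:,}, Default={label}, distance={dist:.4f}")
print("Prediction:", prediction)
```

Under these assumptions the nearest neighbor becomes the sample with Age=45 and Loan=$80,000 (Default=N), and the vote with k=3 gives Default=N rather than Default=Y. Scaling can therefore change the prediction, so it is worth checking results both ways.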

Implementation
