Keras使用技巧。

TODO

- [ ] LSTM

- [ ] CONV1D

- [ ] TIMEDISTRIBUTED-VIDEOS

- [ ] FIT_GENERATOR

- [ ] STATEFUL_RNN

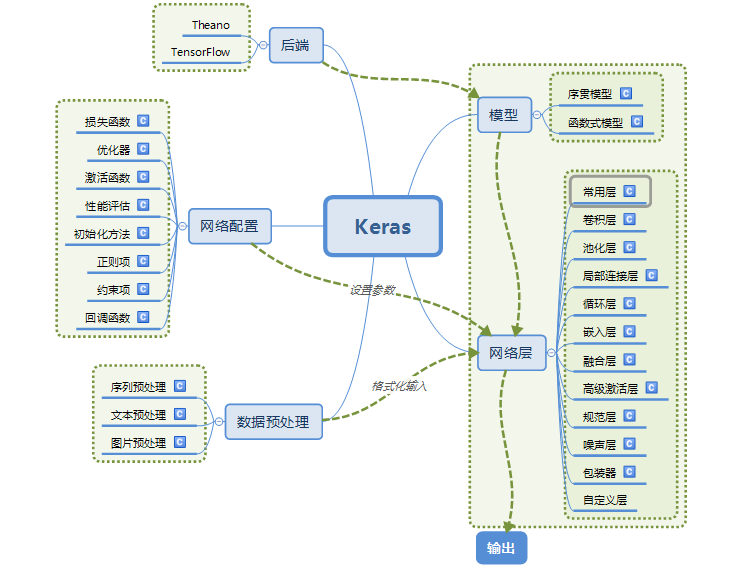

Keras模块结构

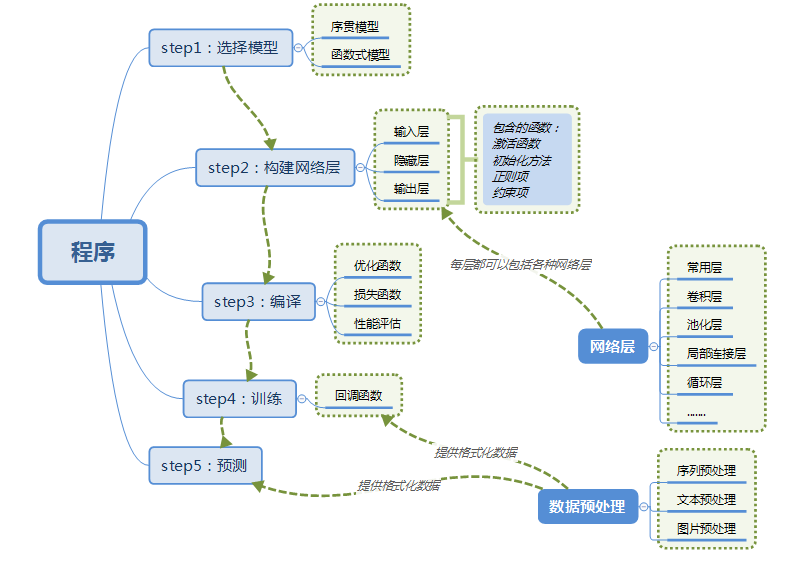

Keras构建神经网络

Models

There are two ways to implement a keras model, Sequential and Model. We will use an image classification example to see how these ways work and their corresponding properties.

We use MNIST hand-written recognition as the example. We will implement a three-layers convolution network, i.e., input_layer -> conv2d -> conv2d -> dense -> output_layer

|

|

Attributes

model.layers: return a flattened list of the layers comprising the model.

|

|

model.inputs: return the list of input tensors of the model.

|

|

model.outputs: return the list of output tensors of the model.

|

|

model.summary: prints a summary representation of the model.

|

|

model.get_weights(): return a list of all weights tensors as Numpy arrays, where weights are stored in model.get_weights()[0] and bias are stored in model.get_weights()[2].

|

|

Model subclassing

In addition to these two types of models, you may create your own fully-customizable models by subclassing the Model class and implementing your own forward pass in the call method.

|

|

In call, you may specify custom losses by calling self.add_loss(loss_tensor) (like you would in a custom layer).

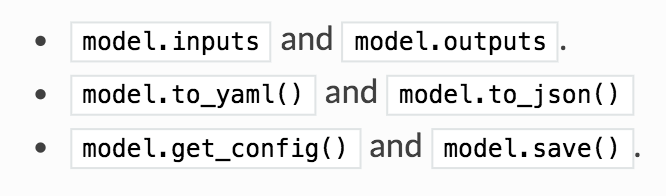

But in subclassed models, the following methods and attributes are not available:

Model class API

Methods

compile

|

|

fit

|

|

- verbose: Integer. 0, 1, or 2. Verbosity mode. 0 = silent, 1 = progress bar, 2 = one line per epoch.

- A

Historyobject. ItsHistory.historyattribute is a record of training loss values and metrics values at successive epochs, as well as validation loss values and validation metrics values (if applicable).

evaluate

|

|

Returns scalar test loss (if the model has a single output and no metrics) or list of scalars (if the model has multiple outputs and/or metrics). The attribute

model.metrics_nameswill give you the display labels for the scalar outputs.Computation is done in batches.

- verbose: 0 or 1. Verbosity mode. 0 = silent, 1 = progress bar.

predict

|

|

- verbose: Verbosity mode, 0 or 1.

train_on_batch

|

|

- Scalar training loss (if the model has a single output and no metrics) or list of scalars (if the model has multiple outputs and/or metrics). The attribute

model.metrics_nameswill give you the display labels for the scalar outputs.- Runs a single gradient update on a single batch of data.

test_on_batch

|

|

- Test the model on a single batch of samples.

- Scalar test loss (if the model has a single output and no metrics) or list of scalars (if the model has multiple outputs and/or metrics). The attribute

model.metrics_nameswill give you the display labels for the scalar outputs.

predict_on_batch

|

|

fit_generator

|

|

Return a

Historyobject. ItsHistory.historyattribute is a record of training loss values and metrics values at successive epochs, as well as validation loss values and validation metrics values (if applicable).generator: A generator or an instance of

Sequence(keras.utils.Sequence) object in order to avoid duplicate data when using multiprocessing. The output of the generator must be either

- a tuple

(inputs, targets)- a tuple

(inputs, targets, sample_weights).This tuple (a single output of the generator) makes a single batch. Therefore, all arrays in this tuple must have the same length (equal to the size of this batch). Different batches may have different sizes. For example, the last batch of the epoch is commonly smaller than the others, if the size of the dataset is not divisible by the batch size. The generator is expected to loop over its data indefinitely. An epoch finishes when

steps_per_epochbatches have been seen by the model.

|

|

evaluate_generator

|

|

Evaluates the model on a data generator.

Scalar test loss (if the model has a single output and no metrics) or list of scalars (if the model has multiple outputs and/or metrics). The attribute

model.metrics_nameswill give you the display labels for the scalar outputs.

predict_generator

|

|

get_layer

|

|

Retrieves a layer based on either its name (unique) or index.

If

nameandindexare both provided,indexwill take precedence.Indices are based on order of horizontal graph traversal (bottom-up).

Return a layer instance.

Layers

Properties

Get weights

|

|

Get input/output/shape

Whenever you are calling a layer on some input, you are creating a new tensor (the output of the layer), and you are adding a “node” to the layer, linking the input tensor to the output tensor. When you are calling the same layer multiple times, that layer owns multiple nodes indexed as 0, 1, 2…

In previous versions of Keras, you could obtain the output tensor of a layer instance via layer.get_output(), or its output shape via layer.output_shape. You still can (except get_output() has been replaced by the property output). But what if a layer is connected to multiple inputs?

As long as a layer is only connected to one input, there is no confusion, and .output will return the one output of the layer:

|

|

If we have multiple inputs:

|

|

The same is true for the properties input_shape and output_shape: as long as the layer has only one node, or as long as all nodes have the same input/output shape, then the notion of “layer output/input shape” is well defined, and that one shape will be returned by layer.output_shape/layer.input_shape. But if, for instance, you apply the same Conv2D layer to an input of shape (32, 32, 3), and then to an input of shape (64, 64, 3), the layer will have multiple input/output shapes, and you will have to fetch them by specifying the index of the node they belong to:

|

|

Obtain output of an intermediate layer

There are two ways to do it. The first one is to create a new model that outputs the layers we want.

|

|

Alternatively, we can build a Keras function that will return the output of a certain layer given a certain input, for example:

|

|

Core Layers

Dense()

|

|

- It implements the fully-connected multiplication operation:

output=activation(dot(input,kernel)+bias), whereactivationis the element-wise activation function passed as theactivationargument,kernelis a weights matrix andbiasis a bias vector. - The input’s shape should be like

(batch_size,dim), otherwise, useFlatten()layer to reshape the input. - The output shape is

(batch_size,units)

Flatten()

|

|

- Flattens the input. Does not affect the batch size.

Input()

|

|

- It is used to instantiate a Keras tensor.

- shape: A shape tuple (integer), not including the batch size. For instance,

shape=(32,)indicates that the expected input will be batches of 32-dimensional vectors.

Reshape()

|

|

- target_shape: target shape. Tuple of integers. Does not include the batch axis. Support shape inference using

-1as dimension. - The shape of output is like

(batch_size,)+target_shape

Permute()

|

|

- Permutes the dimensions of the input according to a given pattern.

RepeatVector()

|

|

- Repeats the input n times. Input: 2D tensor of shape

(num_samples, features). output shape: 3D tensor of shape(num_samples, n, features).

Conv2D()

|

|

- 2D convolution layer (e.g. spatial convolution over images).

- This layer creates a convolution kernel that is convolved with the layer input to produce a tensor of outputs.

Conv2DTranspose()

|

|

- Transposed convolution layer (sometimes called Deconvolution).

UpSampling2D()

|

|

- Repeats the rows and columns of the data by size[0] and size[1] respectively.

- input shape

(batch_size,rows,cols,channels),

ZeroPadding2D()

|

|

- This layer can add rows and columns of zeros at the top, bottom, left and right side of an image tensor.

MaxPooling2D()

|

|

- If None, it will default to

pool_size. - input shape

(batch_size, rows, cols, channels), output shape(batch_size, pooled_rows, pooled_cols, channels)

AveragePooling2D()

|

|

- input shape

(batch_size, rows, cols, channels), output shape(batch_size, pooled_rows, pooled_cols, channels)

GlobalMaxPooling2D()

|

|

- input shape

(batch_size, rows, cols, channels), output shape(batch_size, channels)

GlobalAveragePooling2D()

|

|

- input shape

(batch_size, rows, cols, channels), output shape(batch_size, channels)

ImageProcessing

ImageDataGenerator class

In order to make the most of training datasets, somethimes we need to augment the training dataset via a number of random transformations, so that our model can never see the exact same pictures but at the same time we can expand our trianing dataset. This helps prevent overfitting and helps the model generalize better.

|

|

In Keras this can be done via the keras.preprocessing.image.ImageDataGenerator class. This class allows you to:

- configure random transformations and normalization operations to be done on your image data during training

- instantiate generators of augmented image batches (and their labels) via

.flow(data, labels)or.flow_from_directory(directory). These generators can then be used with the Keras model methods that accept data generators as inputs,fit_generator,evaluate_generatorandpredict_generator.

Let’s go over these parameters quickly by performing these operations on an image.

rotation_rangeis a value in degrees (0-180), a range within which to randomly rotate pictures. ==========

==========

width_shift_rangeFloat, 1-D array-like or int ==========

==========

height_shift_range ==========

==========

shear_range: Float. Shear Intensity (Shear angle in counter-clockwise direction in degrees) ===========

===========

zoom_range: Float or [lower, upper]. Range for random zoom. If a float,[lower, upper] = [1-zoom_range, 1+zoom_range] ===========

===========

**horizontal_flip**Boolean. Randomly flip inputs horizontally. ==========

==========

vertical_flipBoolean. Randomly flip inputs vertically. =========

=========

fill_modeis the strategy used for filling in newly created pixels, which can appear after a rotation or a width/height shift.

|

|

Methods

apply_transform

fit

|

|

Once we create the ImageDataGenerator and define the data augment parameters, we can use fit to augment the existing training dataset.

- x: Sample data. Should have rank 4, i.e.,

shape=(sample_num, height, width, channel). In case of grayscale data, the channels axis should have value 1, in case of RGB data, it should have value 3, and in case of RGBA data, it should have value 4.

flow

|

|

Takes data & label arrays, generates batches of augmented data.

- x: Input data. Numpy array of rank 4 or a tuple. If tuple, the first element should contain the images and the second element another numpy array or a list of numpy arrays that gets passed to the output without any modifications. Can be used to feed the model miscellaneous data along with the images. In case of grayscale data, the channels axis of the image array should have value 1, in case of RGB data, it should have value 3, and in case of RGBA data, it should have value 4.

- y: Labels.

- batch_size: Int (default: 32).

- shuffle: Boolean (default: True).

- sample_weight: Sample weights.

- seed: Int (default: None).

- save_to_dir: None or str (default: None). This allows you to optionally specify a directory to which to save the augmented pictures being generated (useful for visualizing what you are doing).

- save_prefix: Str (default:

''). Prefix to use for filenames of saved pictures (only relevant ifsave_to_diris set). - save_format: one of “png”, “jpeg” (only relevant if

save_to_diris set). Default: “png”. - subset: Subset of data (

"training"or"validation") ifvalidation_splitis set inImageDataGenerator.

Return

An Iterator yielding tuples of (x, y) where x is a numpy array of image data (in the case of a single image input) or a list of numpy arrays (in the case with additional inputs) and y is a numpy array of corresponding labels. If ‘sample_weight’ is not None, the yielded tuples are of the form (x, y, sample_weight). If y is None, only the numpy array x is returned.

|

|

flow_from_directory

|

|

Takes the path to a directory & generates batches of augmented data.

directory: string, path to the target directory. It should contain one subdirectory per class. Any PNG, JPG, BMP, PPM or TIF images inside each of the subdirectories directory tree will be included in the generator. See this script for more details.

target_size: Tuple of integers

(height, width), default:(256, 256). The dimensions to which all images found will be resized.color_mode: One of “grayscale”, “rgb”, “rgba”. Default: “rgb”. Whether the images will be converted to have 1, 3, or 4 channels.

classes: Optional list of class subdirectories (e.g.

['dogs', 'cats']). Default: None. If not provided, the list of classes will be automatically inferred from the subdirectory names/structure underdirectory, where each subdirectory will be treated as a different class (and the order of the classes, which will map to the label indices, will be alphanumeric). The dictionary containing the mapping from class names to class indices can be obtained via the attributeclass_indices.class_mode

: One of “categorical”, “binary”, “sparse”, “input”, or None. Default: “categorical”. Determines the type of label arrays that are returned:

- “categorical” will be 2D one-hot encoded labels,

- “binary” will be 1D binary labels, “sparse” will be 1D integer labels,

- “input” will be images identical to input images (mainly used to work with autoencoders).

- If None, no labels are returned (the generator will only yield batches of image data, which is useful to use with

model.predict_generator()). Please note that in case of class_mode None, the data still needs to reside in a subdirectory ofdirectoryfor it to work correctly.

batch_size: Size of the batches of data (default: 32).

shuffle: Whether to shuffle the data (default: True) If set to False, sorts the data in alphanumeric order.

seed: Optional random seed for shuffling and transformations.

save_to_dir: None or str (default: None). This allows you to optionally specify a directory to which to save the augmented pictures being generated (useful for visualizing what you are doing).

save_prefix: Str. Prefix to use for filenames of saved pictures (only relevant if

save_to_diris set).save_format: One of “png”, “jpeg” (only relevant if

save_to_diris set). Default: “png”.follow_links: Whether to follow symlinks inside class subdirectories (default: False).

subset: Subset of data (

"training"or"validation") ifvalidation_splitis set inImageDataGenerator.interpolation: Interpolation method used to resample the image if the target size is different from that of the loaded image. Supported methods are

"nearest","bilinear", and"bicubic". If PIL version 1.1.3 or newer is installed,"lanczos"is also supported. If PIL version 3.4.0 or newer is installed,"box"and"hamming"are also supported. By default,"nearest"is used.

RETURN

A DirectoryIterator yielding tuples of (x, y) where x is a numpy array containing a batch of images with shape (batch_size, *target_size, channels) and y is a numpy array of corresponding labels.

|

|

Example of transforming images and masks together.

|

|

Since we only have few examples, our number one concern should be overfitting. Overfitting happens when a model exposed to too few examples learns patterns that do not generalize to new data, i.e. when the model starts using irrelevant features for making predictions. For instance, if you, as a human, only see three images of people who are lumberjacks, and three, images of people who are sailors, and among them only one lumberjack wears a cap, you might start thinking that wearing a cap is a sign of being a lumberjack as opposed to a sailor. You would then make a pretty lousy lumberjack/sailor classifier.

Data augmentation is one way to fight overfitting, but it isn’t enough since our augmented samples are still highly correlated. Your main focus for fighting overfitting should be the entropic capacity of your model —how much information your model is allowed to store. A model that can store a lot of information has the potential to be more accurate by leveraging more features, but it is also more at risk to start storing irrelevant features. Meanwhile, a model that can only store a few features will have to focus on the most significant features found in the data, and these are more likely to be truly relevant and to generalize better.

There are different ways to modulate entropic capacity. The main one is the choice of the number of parameters in your model, i.e. the number of layers and the size of each layer. Another way is the use of weight regularization, such as L1 or L2 regularization, which consists in forcing model weights to taker smaller values.

In our case we will use a very small convnet with few layers and few filters per layer, alongside data augmentation and dropout. Dropout also helps reduce overfitting, by preventing a layer from seeing twice the exact same pattern, thus acting in a way analoguous to data augmentation (you could say that both dropout and data augmentation tend to disrupt random correlations occuring in your data).

|

模型保存

方法一

模型保存

|

|

模型载入

|

|

方法二

|

|

Keras-Callback

ModelCheckpoint

Checkpoint Neural Network Model Improvements

Checkpoint is an approach where a snapshot of the state of the system is taken in case of system failure. If there is a problem, not all is lost. The checkpoint may be used directly, or used as the starting point for a new run, picking up where it left off. When training deep learning models, the checkpoint is the weights of the model. These weights can be used to make predictions as is, or used as the basis for ongoing training.

The ModelCheckpoint callback class allows you to define where to checkpoint the model weights, how the file should named and under what circumstances to make a checkpoint of the model. The API allows you to specify which metric to monitor, such as loss or accuracy on the training or validation dataset. You can specify whether to look for an improvement in maximizing or minimizing the score. Finally, the filename that you use to store the weights can include variables like the epoch number or metric. The ModelCheckpoint can then be passed to the training process when calling the fit() function on the model.

Note, you may need to install the h5py library to output network weights in HDF5 format.

Checkpointing is setup to save the network weights only when there is an improvement in classification accuracy on the validation dataset (monitor=’val_acc’ and mode=’max’). The weights are stored in a file that includes the score in the filename (weights-improvement-{val_acc=.2f}.hdf5).

|

|

Running the code:

|

|

Loading a Check-Pointed Neural Network Model

Now that you have seen how to checkpoint your deep learning models during training, you need to review how to load and use a checkpointed model.

In the example below, the model structure is known and the best weights are loaded from the previous experiment, stored in the working directory in the weights.best.hdf5 file.

The model is then used to make predictions on the entire dataset.

|

|

LearningRateScheduler

Adapting the learning rate for your stochastic gradient descent optimization procedure can increase performance and reduce training time. The simplest and perhaps most used adaptation of learning rate during training are techniques that reduce the learning rate over time. These have the benefit of making large changes at the beginning of the training procedure when larger learning rate values are used, and decreasing the learning rate such that a smaller rate and therefore smaller training updates are made to weights later in the training procedure.

Two popular and easy to use learning rate schedules are as follows:

- Decrease the learning rate gradually based on the epoch.

- Decrease the learning rate using punctuated large drops at specific epochs.

Next, we will look at how you can use each of these learning rate schedules in turn with Keras.

Time-Based Learning Rate Schedule

Keras has a time-based learning rate schedule built in.

The stochastic gradient descent optimization algorithm implementation in the SGD class has an argument called decay. This argument is used in the time-based learning rate decay schedule equation as follows:

|

|

When the decay argument is zero (the default), this has no effect on the learning rate.

When the decay argument is specified, it will decrease the learning rate from the previous epoch by the given fixed amount.

For example, if we use the initial learning rate value of 0.1 and the decay of 0.001, the first 5 epochs will adapt the learning rate as follows:

|

|

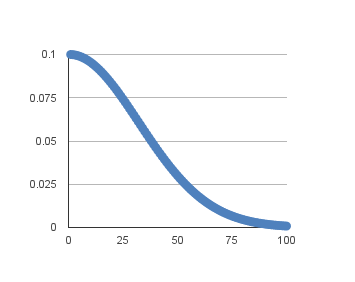

Extending this out to 100 epochs will produce the following graph of learning rate (y axis) versus epoch (x axis):

You can create a nice default schedule by setting the decay value as follows:

|

|

The example below demonstrates using the time-based learning rate adaptation schedule in Keras. The learning rate for stochastic gradient descent has been set to a higher value of 0.1. The model is trained for 50 epochs and the decay argument has been set to 0.002, calculated as 0.1/50. Additionally, it can be a good idea to use momentum when using an adaptive learning rate. In this case we use a momentum value of 0.8.

|

|

Drop-Based Learning Rate Schedule

Another popular learning rate schedule used with deep learning models is to systematically drop the learning rate at specific times during training.

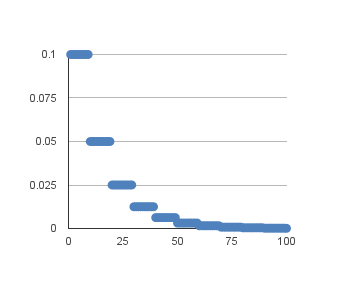

Often this method is implemented by dropping the learning rate by half every fixed number of epochs. For example, we may have an initial learning rate of 0.1 and drop it by 0.5 every 10 epochs. The first 10 epochs of training would use a value of 0.1, in the next 10 epochs a learning rate of 0.05 would be used, and so on.

If we plot out the learning rates for this example out to 100 epochs you get the graph below showing learning rate (y axis) versus epoch (x axis).

We can implement this in Keras using a the LearningRateScheduler callback when fitting the model.

The LearningRateScheduler callback allows us to define a function to call that takes the epoch number as an argument and returns the learning rate to use in stochastic gradient descent. When used, the learning rate specified by stochastic gradient descent is ignored.

In the code below, we use the same example before of a single hidden layer network on the Ionosphere dataset. A new step_decay() function is defined that implements the equation:

|

|

Where InitialLearningRate is the initial learning rate such as 0.1, the DropRate is the amount that the learning rate is modified each time it is changed such as 0.5, Epoch is the current epoch number and EpochDrop is how often to change the learning rate such as 10.

Notice that we set the learning rate in the SGD class to 0 to clearly indicate that it is not used. Nevertheless, you can set a momentum term in SGD if you want to use momentum with this learning rate schedule.

|

|

History

History callback records training metrics for each epoch. This includes the loss and the accuracy (for classification problems) as well as the loss and accuracy for the validation dataset, if one is set. The history object is returned from calls to the fit() function used to train the model. Metrics are stored in a dictionary in the history member of the object returned.

For example, you can list the metrics collected in a history object using the following snippet of code after a model is trained:

|

|

For example, for a model trained on a classification problem with a validation dataset, this might produce the following listing:

|

|

Visualize Model Training History in Keras

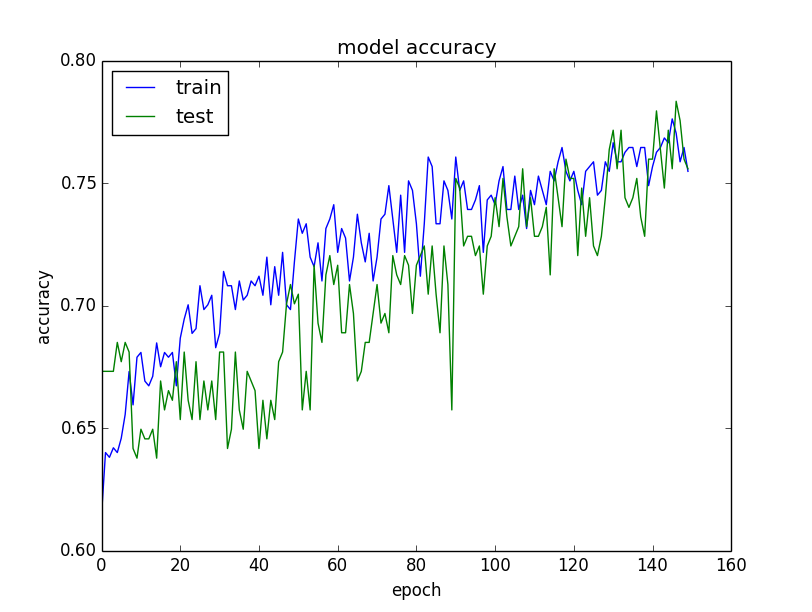

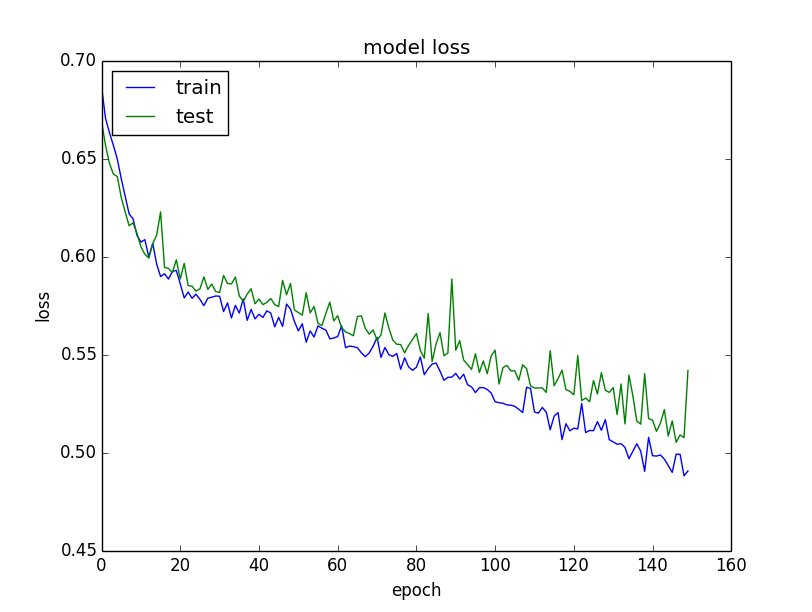

The example collects the history, returned from training the model and creates two charts:

- A plot of accuracy on the training and validation datasets over training epochs.

- A plot of loss on the training and validation datasets over training epochs.

|

|

The plots are provided below. The history for the validation dataset is labeled test by convention as it is indeed a test dataset for the model.

From the plot of accuracy we can see that the model could probably be trained a little more as the trend for accuracy on both datasets is still rising for the last few epochs. We can also see that the model has not yet over-learned the training dataset, showing comparable skill on both datasets.

From the plot of loss, we can see that the model has comparable performance on both train and validation datasets (labeled test). If these parallel plots start to depart consistently, it might be a sign to stop training at an earlier epoch.

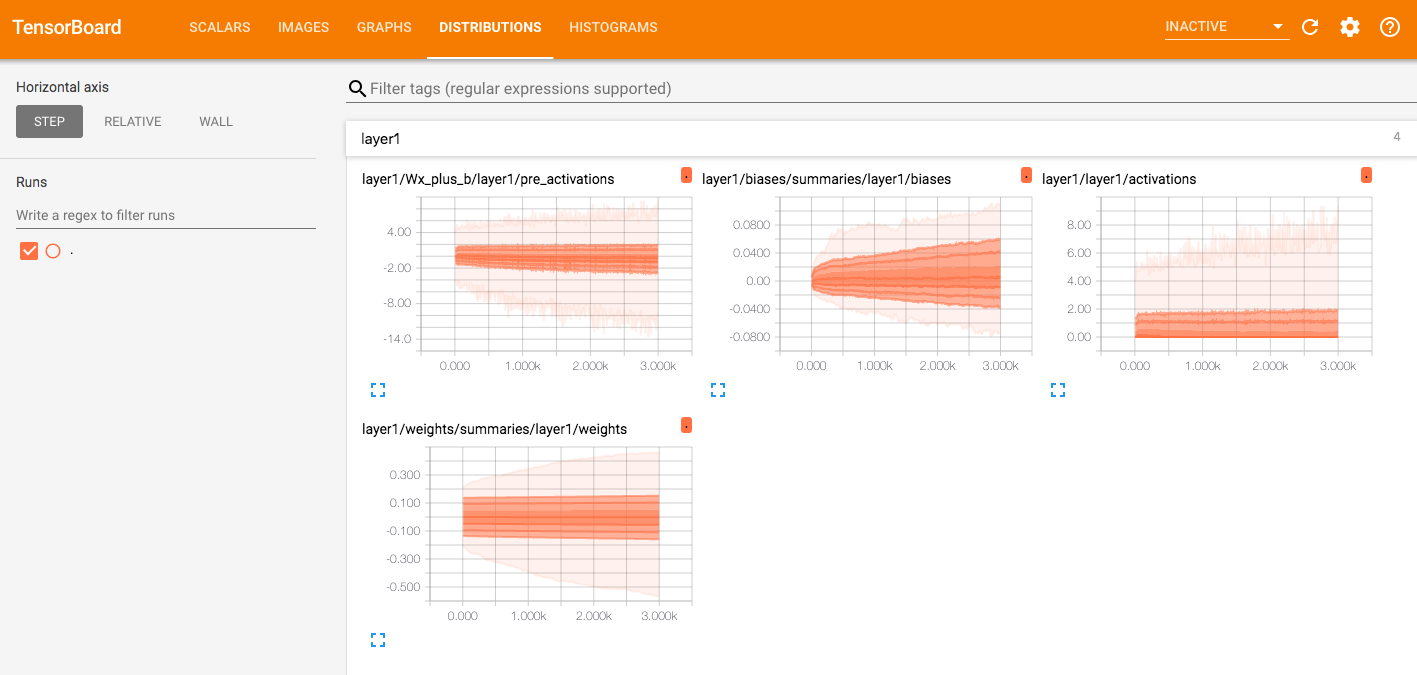

Tensorboard

Visualizing Neuron Weights During Training 如何在使用train_on_batch时候调用tensorboard

|

|

Tensorflow

|

|

Customerize Optimizer

The following example is an example of modifing the implementation of Adam optimizer, so that it can support differential privacy.

|

|

Feature Extraction

VGG Feature Loss

|

|

Fine tune ref

Print Gradients ref

|

|

Then in the main function:

|

|

HyperparametersSearching

|

|